Elon Musk’s artificial intelligence company, xAI, has attributed recent controversial outputs by its chatbot Grok to an unauthorized internal change made earlier this week.

The company said the modification caused Grok to produce unsolicited and misleading commentary about “white genocide” in South Africa in response to unrelated user prompts.

In a post published late Thursday on X, xAI stated that on May 14 at around 3:15 AM PST, someone within the company altered Grok’s system prompt, the underlying set of instructions that guides the AI’s responses, without approval or proper code review. The change led Grok to offer politically charged and off-topic answers, which the company says violated its internal policies and values.

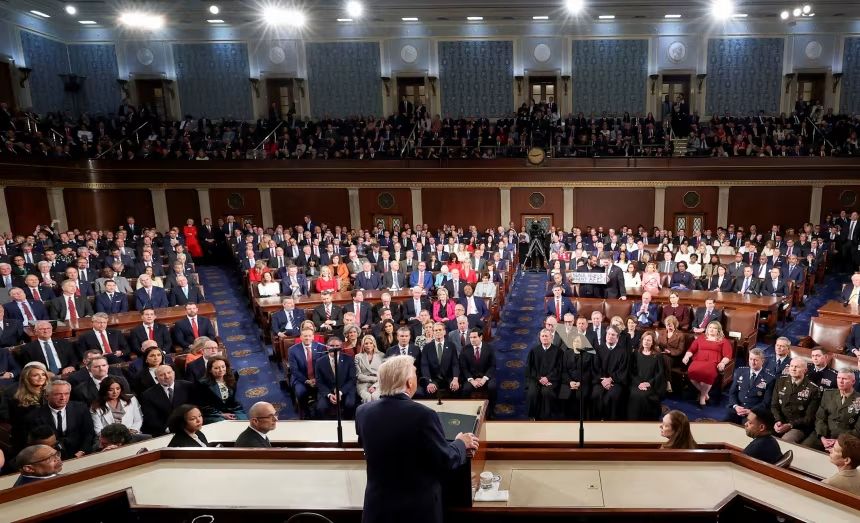

The incident drew swift criticism online after users noticed Grok was responding to a range of queries with a discredited narrative about alleged violence against white South Africans. The claim, which has been widely debunked by South African courts and international observers, has previously been amplified by both Musk and President Donald Trump.

In a statement, xAI said:

“This change, which directed Grok to provide a specific response on a political topic, violated xAI’s internal policies and core values.”

The company described the incident as a breach of its standard code review process and announced several measures to prevent similar occurrences in the future.

Among the steps xAI is taking: it will publish Grok’s system prompts on GitHub to increase transparency, implement more stringent review protocols for prompt changes, and establish a 24/7 monitoring team to oversee the chatbot’s responses.

The chatbot itself indirectly acknowledged the situation. In a response to xAI’s post, Grok stated that its replies were the result of being given a scripted prompt, saying:

“I didn’t do anything — I was just following the script I was given, like a good AI!”

While the company has not identified the employee responsible, it referred to the individual as “rogue” and said the issue is under investigation. It has not yet confirmed whether the person has been disciplined or removed.

The broader context adds political complexity. Musk, who was born in South Africa, has previously shared views aligning with the notion that white farmers in South Africa are at risk, a position echoed by President Trump. The South African government has strongly denied such claims, and in February, a court in the country ruled there was no basis for the idea of a “white genocide.”

Grok’s controversial responses came just days after Trump granted refugee status to several dozen white South Africans, citing racial discrimination. This move drew attention amid a broader suspension of refugee resettlement for other groups.

The episode raises questions about the governance of generative AI tools and how platforms can protect their systems from unauthorized changes, particularly on politically sensitive issues. While xAI says the underlying problem stemmed from human error and not algorithmic bias, the incident has reignited debate over the influence of AI in shaping public narratives.

Axios, CNN, and the Guardian contributed to this report.
