You can almost track the AI boom from 30,000 feet. Look out the airplane window near Phoenix, Dublin, northern Virginia, or now Cheyenne, and you’ll spot a new kind of industrial landscape: gigantic, windowless boxes, ringed with transformers and cooling gear, sitting on hundreds of acres of graded dirt.

Those are data centers, and we are in the middle of a global construction wave.

Data Centre Magazine’s rundown of the top construction firms in the sector reads like a who’s who of global builders: Turner, DPR, Skanska, AECOM, Mace, Kajima, and others now have dedicated “mission-critical” or hyperscale practices. These companies are chasing a market projected to reach hundreds of billions of dollars by 2030, driven largely by hyperscale campuses for a handful of cloud and AI giants.

Autodesk, which sells software to many of these builders, flat-out calls data centers “one of the fastest-growing construction sectors,” noting that projects now routinely exceed a million square feet and 100+ megawatts (MW) of IT load. It emphasizes not only size but speed: schedules are measured “in months rather than years,” with modular construction and repeatable design templates used to roll out nearly identical buildings across multiple regions.

We’ve been building data centers for decades – for cloud, video streaming, fintech, e-commerce. What’s changed is the intensity of AI compute.

Goldman Sachs describes AI as transforming data centers from relatively steady power users into “massive, variable loads.” GPU clusters used for training large models can draw tens of megawatts per building and may run flat-out for weeks. That pushes both capacity and power density far beyond what enterprise facilities were designed for.

The numbers are staggering:

- In 2015 there were ~259 hyperscale data centers worldwide; by 2025 that figure has passed 1,100, and the market is valued at roughly $167 billion, on track for about $600 billion by 2030.

- Anthropic alone has announced a roughly $50 billion multi-year plan for US data center and supercomputer infrastructure, financed in part through complex debt structures and partnerships with cloud providers.

BCG argues that this is now a “fourth utility” moment: data center capacity, especially AI-capable capacity, is being treated the way electricity or railways were in previous eras, with governments and investors scrambling to secure it as a strategic asset.

The question isn’t just how many data centers get built, but where.

A Wired analysis, drawing on climate and grid-emissions modeling, points out that the carbon footprint of a given facility can vary dramatically depending on local energy mix and climate. Put a data center in a region with cool temperatures and high shares of renewables or nuclear, and its lifetime emissions can be several times lower than the same building in a hot, coal-heavy grid.

Turner & Townsend’s cost index shows that developers are increasingly chasing not just cheap land but a combination of:

- Available high-voltage transmission;

- Relatively low construction and labor costs;

- Favorable permitting;

- And access to either abundant water or the ability to go water-free with advanced cooling.

S&P and Allianz, from very different angles, underline that all this is now systemically important finance. S&P notes that data center investment is “moving the macro needle,” with multi-billion-dollar securitizations and campus programs becoming common; Allianz, meanwhile, warns that project complexity, supply-chain constraints, and grid connection delays are now major construction risks that can derail those big bets.

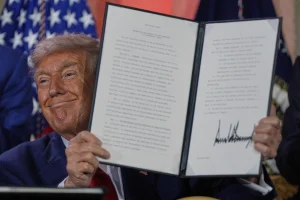

President Donald Trump holds a signed executive order after speaking during an AI summit at the Andrew W. Mellon Auditorium, Wednesday, July 23, 2025, in Washington (AP Photo / Julia Demaree Nikhinson)

AI didn’t invent the data center, but it did stomp on the accelerator, and now the rest of the world – power grids, local politics, and the environment – is trying to keep up.

Data centers used to be boring. They were zoning board business, not national politics. That’s over.

The Trump administration has put its thumb firmly on the scale in favor of rapid build-out. A July 2025 presidential action directs federal agencies to accelerate permitting for “critical data center infrastructure,” framing these facilities as essential to US leadership in AI and national security.

The executive order and accompanying fact sheet do a few key things:

- Instruct agencies to coordinate on environmental reviews and right-of-way approvals;

- Push for streamlined National Environmental Policy Act (NEPA) reviews for data centers and associated power lines;

- And signal to investors that the federal government sees this as strategic infrastructure, akin to LNG terminals or defense plants.

Legal analysis from White & Case describes the move as an effort to “reduce regulatory friction” and provide more certainty to developers, especially around federal land and transmission corridors.

The bottleneck now isn’t usually the building; it’s the power.

Inside Climate News reports that the administration has pushed the Department of Energy and the Federal Energy Regulatory Commission (FERC) to fast-track rules that would let large loads like data centers file joint interconnection requests with their own generation (often gas or nuclear) and get grid studies done in months rather than years.

Bloomberg’s coverage – summarized in other outlets since the article itself is paywalled – highlights the tension: spending on factory construction in 2025 has sagged even as data center investment surges ~18%, raising questions about whether an “AI build-out” is crowding out the manufacturing renaissance the same administration promised.

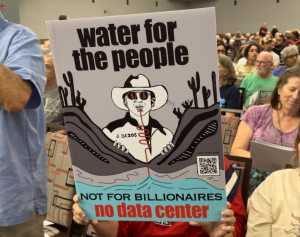

At the same time, local resistance is hardening. Wired describes a growing wave of moratoria, siting fights, and tax-abatement controversies from Northern Virginia to the Netherlands. In many communities, residents are asking why they should accept higher electricity prices, noise, and land use for facilities that create relatively few jobs.

Political scientist Megan Mullin at UCLA notes that data centers have become a wedge issue in state and local politics: Republicans often frame opposition as anti-business or anti-innovation; Democrats are split between climate concerns and the lure of high-value investment.

The bottom line: Washington is betting big on data centers as strategic assets, while city councils and county commissions are left to deal with the very tangible side effects – trucks, substations, and rising power bills.

Follow the money, and you end up with a much less tidy story than “AI = prosperity.”

Wired’s deep dive on the “AI data center boom” argues that construction and equipment spending on data centers is now one of the few bright spots in an otherwise sluggish investment landscape – responsible for a surprisingly large share of recent US GDP growth and high-wage construction jobs.

Fortune, citing Harvard economist Jason Furman, goes even further: nearly all of the United States’ GDP growth in the first half of 2025 came from data centers and information processing structures. Strip that out, and growth would have been almost flat, at about 0.1%.

S&P Global backs this up at a global scale, noting that data center investment has become large enough to “move the macro needle,” particularly in places like the US, Ireland, and Singapore where hyperscale projects can rival auto or chip plants in capital intensity.

A detailed economic report from ALFA Institute and related policy groups points out that the local impact is far more ambiguous. Construction spending is large and temporary; permanent jobs per facility are often in the low hundreds at most, heavily skewed toward high-skill tech and facilities roles. Many centers operate as “lights-out” or near-lights-out facilities with very little day-to-day staff.

Brookings describes data centers as “capital-intensive, labor-light” infrastructure. Local governments are often tempted by the promise of a big tax base and prestige, so they offer generous property-tax abatements, sales-tax exemptions on equipment, and discounted power. But that can mean the effective fiscal benefit is much smaller than headline investment suggests – especially in states without corporate or personal income taxes.

The New York Times and The Guardian both warn that the financial engineering behind these builds is getting more complex and risky. The Guardian talks about a $3 trillion global spending spree powered by debt, tax incentives, and expectations of future AI revenue; The New York Times has raised concerns about concentrated credit risk if AI monetization falls short.

When you step back, you get a picture like this:

- Nationally, data centers are propping up macro stats and energizing certain construction and electrical equipment sectors.

- Locally, the bargain is murkier: limited permanent jobs, uncertain fiscal gains, and big infrastructure demands that may end up socialized through ratepayers.

Which raises the question: are we building a stable new backbone for the digital economy – or a lot of exquisitely air-conditioned white elephants?

If there’s one thing everyone agrees on, it’s this: the energy footprint of AI-era data centers is not a side issue anymore. It is the issue.

The International Energy Agency (IEA) projects that data centers, AI, and crypto combined could consume roughly double their 2022 electricity use by 2026, with AI alone accounting for a significant share of that growth.

In the United States, a Congressional Research Service brief and a Department of Energy report estimate that data centers already use around 4% of US electricity (a figure echoed in other analyses) and that this share could more than double by 2030 under aggressive AI scenarios.

The Environmental and Energy Study Institute (EESI) warns that these rapidly growing loads are “upending power grids,” forcing utilities to reconsider generation plans, delay coal retirements, or rush new gas peakers to serve data center clusters.

Business Insider’s interactive map shows the scale of this build-out: dense clusters in Northern Virginia, central Ohio, Texas, Arizona, and now the Mountain West, each consuming hundreds or thousands of megawatts when fully built.

A multidisciplinary report from the University of Michigan’s Science, Technology, and Public Policy program (STPP) argues that without strong guardrails, AI data centers could lock in fossil-heavy infrastructure and increase regional emissions even if national totals remain roughly flat.

Energy is only the first line of impact.

The Guardian’s environment desk points out that water-cooled facilities can draw hundreds of thousands of gallons per day, competing with agriculture and households in already strained basins, and contributing to “heat islands” where local temperatures tick up due to waste heat.

Cornell researchers have produced a “roadmap” for understanding AI data center environmental impacts, emphasizing life-cycle effects: not just electricity and water, but also the embodied carbon in construction materials and servers, plus upstream impacts from mining and semiconductor production.

Reporting by EESI and NPR on Google’s AI centers notes that many tech companies have pledged to match energy use with renewables but, in practice, still depend heavily on existing grids that are far from fully decarbonized. Local residents see higher bills and more transmission lines long before they see clean-energy benefits.

Forbes, writing from the infrastructure/engineering side, stresses that supporting AI means “building the energy infrastructure” around these centers: new gas plants, big batteries, upgraded substations, and long-distance transmission. These are expensive, slow, and politically contentious projects; if data centers don’t help pay for them, someone else will.

Data Center Knowledge catalogues a growing wave of protests and local opposition: concerns about noise from backup generators and cooling equipment, the visual blight of huge windowless boxes, and fears that new loads will push up electricity rates for everyone else.

DataCenterWatch estimates that over $60 billion in US data center projects have been delayed, blocked, or withdrawn amid local opposition over the past few years. Reasons range from water and power worries to land-use conflicts and anger over tax deals.

Fortune and other outlets connect this directly to 2026 election politics: in several swing states, candidates are now campaigning for or against big AI campuses, tying them to narratives about climate, cost of living, and who benefits from “innovation.”

In other words, the environmental and energy problems of data centers are no longer an afterthought – they’re central to the social license to build them.

Nowhere are the trade-offs clearer than in Wyoming, where the AI data center boom is colliding with a small population, a resource-rich grid, and a very particular tax structure.

Wyoming Public Media reports that Cheyenne’s mayor has welcomed a planned 1.8-gigawatt AI data center complex – an astonishing figure in a state where total residential consumption is tiny by comparison. Local officials talk about “being part of the future,” diversifying beyond coal and oil, and capturing a new tax base.

Associated Press coverage puts it bluntly: the planned campus would use more electricity than all the homes in Wyoming combined, requiring major new generation and transmission. Utilities and developers have floated combinations of gas, wind, and possibly nuclear to meet the demand.

Microsoft, which already operates a sizeable cloud region near Cheyenne, highlights its investments in local workforce programs, renewable energy purchases, and community grants. Its “Microsoft Local” site for Wyoming points to data center-funded initiatives in education, broadband, and nonprofits.

The boom isn’t confined to Cheyenne. Cowboy State Daily reports that a separate 1.5-gigawatt data center project has been announced near Casper, promising “billions in investment” and some number of high-skill jobs, though details are sparse.

Cloud and colocation industry trackers already list multiple existing and planned facilities across the state, concentrated along major transmission corridors and near gas infrastructure. Utilities like Black Hills Energy aggressively market Wyoming as a prime data center location, touting low power costs (for now), cool climate, and “business-friendly” regulation.

Meanwhile, the University of Wyoming runs its own data center for research and campus IT – tiny by hyperscale standards but symbolically important, showing how deeply digital infrastructure is woven into education and local innovation ecosystems.

Not everyone is cheering. A series of investigations by Cowboy State Daily highlights ratepayer fears that residential and small-business customers will end up subsidizing grid upgrades for giant AI tenants. People worry about “soaring electric rates” if utilities build new gas plants or transmission mainly to serve a handful of corporate customers.

WyoFile, an independent outlet, argues that Wyoming should lean into data centers as an economic development tool – but only with strong conditions: tying tax incentives to clear job and training commitments, requiring developers to fund transmission upgrades, and making sure that clean-energy build-out keeps pace.

There’s also a local environmental dimension. Oil City News has covered a proposed $1.2-billion project near Cheyenne that promises “water-free operations” via advanced cooling and on-site power. That is exactly the sort of innovation residents want to see, but skepticism remains about how “water-free” and “clean” the operations will really be in practice.

Wyoming is a microcosm of the national debate: big promises, big numbers, genuine opportunities – and equally real risks of higher bills, resource strain, and a lopsided distribution of benefits.

So where do we go from here? The experts we spoke with sketch out three intertwined futures: one about infrastructure, one about justice, and one about AI itself.

David Mytton, a researcher at the University of Oxford and founder of the cybersecurity startup Arcjet, notes that data centers already consume roughly 4.4% of US electricity (a figure that matches recent CRS and Pew estimates) and could rise to somewhere between 6.7% and 12% by the late 2020s. But, he argues, the real crunch isn’t a national electricity shortage:

“The real problem is where and how quickly that demand hits the grid… The risk is that huge new loads arrive faster than we build transmission and substations, so households and small businesses end up subsidizing infrastructure. States should stop handing out blank-check tax breaks and cheap power, and instead make approvals contingent on data center developers paying for the grid upgrades and the clean energy generation they require. Environmentally, the story is similar: at a national level, data center water use is tiny compared to agriculture or heavy industry, but locally it can be a serious problem if you drop large, water-cooled facilities into already stressed basins. Regulators should treat AI campuses like any other heavy industry: no permits or incentives without hard requirements for additional clean capacity plus transparent reporting of energy and water use.”

Dr. Mytton’s prescription is straightforward but politically tough:

- No blank-check tax breaks or ultra-cheap power deals;

- Mandatory contributions to both transmission and clean-energy build-out;

- And transparent reporting of energy and water use, so communities can judge whether local impacts are acceptable.

That lines up almost perfectly with what DOE and state regulators are now quietly debating.

Robert A. Beauregard, professor of urban planning at Columbia GSAPP and chair of its Doctoral Subcommittee on Urban Planning, approaches this from the city’s point of view. For a municipal official, a data center looks like a dream land use:

“Consider the extent to which municipal officials see data centers as a taxable land use that brings few children to the community who would necessitate greater school expenditures, little automobile traffic, and relatively few jobs. I suspect the centers are expensive and will have a high assessed value and pay significant taxes and allow tax rates and residential taxes to be kept down. So, people’s energy costs might go up, but their property taxes will not.”

Dr. Beauregard, however, flags several blind spots: environmental issues like noise, water use, and air pollution from backup generators; and the question of what these massive, often fortress-like buildings mean for the landscape and community identity:

“In upstate NY, solar panel arrays have met opposition for how they look and for taking land away from agriculture.”

In places like Wyoming, that aesthetic and cultural dimension matters just as much as the spreadsheets.

From inside the sector, Jay Dietrich, research director of sustainability at Uptime Institute, underscores “two distinct reactions” to data center growth at the local, state, and national levels:

“One group welcomes the potential economic growth and expansion of the tax base, while the other is concerned about the effect of these facilities on water and electricity supply, transmissions and distribution systems infrastructure, and rates. Those who are alarmed by this rapid growth have become more assertive, advocating for local ordinances or state legislation to prevent excessive electrical rate increases, guide conditions for electrical grid connections, and minimize impacts on the local community and water systems.”

Dietrich argues that operators can’t get away with black-box secrecy anymore:

“Data center operators need to be more forthcoming about their energy and water use and impact on local communities and infrastructure. New data centers can deploy highly energy-efficient cooling systems that are water-free or use a minimal amount of water for a limited number of hours each year. Operators need to address the quantity of their energy and water use, how it changes over the year, its impact on local systems, and how their facility will integrate into the community and minimize resource use.”

There’s a convergence here with trends in design journalism and architecture – aerial photos of more thoughtfully designed campuses, rather than faceless gray bunkers, are not just marketing; they’re part of maintaining public tolerance.

Then there’s the uncomfortable possibility raised by Lars Kotthoff, an adjunct professor at the University of Wyoming: what if the AI boom driving this build-out doesn’t deliver the transformation its boosters promise?

“The current data center boom is fueled by rising demand for AI, in particular the development of larger AI models that are extremely expensive to train. Whether this will be of long-term benefit remains to be seen, especially as many people and companies are starting to realize that AI is not the panacea the companies selling it make it out to be – current AI models have serious limitations and flaws. This is one of the reasons for increased data center construction, as one school of thought is that with increased scale, these limitations will go away – but this requires substantially more computational resources. In my opinion, it is doubtful whether scaling up AI models will address their limitations and yield benefits that justify the massive expenditure and consumption of resources that large data centers bring with them. The chance that at least some of the data centers currently being built will turn into ‘white elephants’ in a few years is quite high.”

That risk is already visible at the margins: reports of hyperscalers quietly canceling or delaying gigawatts of planned capacity as AI demand forecasts wobble.

Christopher Jones, faculty head and associate professor of history at Arizona State University’s School of Historical, Philosophical, and Religious Studies, and the author of *The Invention of Infinite Growth: How Economists Came to Believe a Dangerous Delusion*, widens the lens even further. He breaks the environmental impacts of data centers into three layers:

“The first is the huge amount of electricity they require to operate. The exact environmental impacts of that energy depend on the local energy mix near the data center in question, but generally it involves a significant amount of greenhouse gas emissions as well as possible local pollution from plant emissions. The second is the large amount of water that is necessary to cool the servers. All that computer processing generates a lot of heat that needs to be cooled. In water-scarce regions this looms as the biggest potential hurdle. A third set of environmental impacts concerns the construction of the center and, more importantly, the manufacturing of all the servers. Servers, like most electrical equipment, require many different metals, rare earths, etc. to manufacture. Significantly expanding the use of servers means environmental impact from increased mining for all the necessary components.”

He argues that federal and state regulation should push big tech for stronger environmental protections, but that solutions will vary by region: in Arizona, water may be the limiting factor; in other regions, coal-heavy electricity may be the bigger problem even if water is abundant:

“Regulations at the state and local level are likely to be more effective during the current administration. This may also be wise, because it may make sense to have different policies in different places.”

Jones’s key point is that this is not just an engineering problem; it’s a political one about who bears the burdens of digital infrastructure and who reaps the rewards.

That dovetails with Jeffrey Alan Lockwood’s ethical framing from Wyoming. Dr. Lockwood, Professor of Natural Sciences and Humanities at the University of Wyoming, asks, essentially, whether data centers deliver “the greatest good for the greatest number” – or whether they privatize benefits while socializing costs:

“Much depends on the particular elements of a data center. Some provide services that might be understood as generally helping many people or at least those in more economically privileged situations (e.g., healthcare, financial services, and manufacturing). But the larger data centers, owned by single, large companies for their exclusive use, account for about a quarter of the total.”

Dr. Lockwood notes that:

- Many large, single-tenant facilities primarily benefit already-privileged users and shareholders;

“The biggest winners when it comes to data centers are the powerful corporations and the wealthiest sector of the population that both garner short-term benefits and externalize costs to distant people and times.”

- In states like Wyoming, with no corporate or personal income tax, new workers can actually be a fiscal liability relative to the state services they consume;

“The average Wyoming family receives about $61,263 in state and local services, while paying only about $4,429 in taxes.”

- And the biggest harms – depleted water, higher electricity costs, climate impacts – tend to fall on those least able to insulate themselves: people who can’t easily move or pay for ever-higher cooling bills in a warming world.

His line about “exchanging atmospheric carbon for terrestrial data” puts a sharp edge on the trade-off: we are effectively deciding how much environmental degradation we will tolerate for faster and bigger digital services. As Dr. Lockwood concludes:

“It’s foolish to suggest that all data centers foster environmental injustice, but it’s sensible to ask whether any particular facility, in any given locale, will make the community – or the nation, or the world – a better place to live today and into the foreseeable future… Are we individualizing the benefits and collectivizing the costs?”

Yet as Jesse Kirkpatrick, a research associate professor and the co-director of the Mason Autonomy and Robotics Center at George Mason University, points out, the federal government isn’t just thinking about productivity; it’s thinking about geopolitics:

“The Trump administration’s stance on the surge in data center construction is best understood through its broader commitment to accelerating US AI capabilities and consolidating technological leadership. In Washington, large compute facilities are increasingly viewed as part of the national security ecosystem, not simply private commercial builds. That framing has made federal officials far more inclined to champion rapid expansion, even as questions emerge about water use, land pressures, and grid resilience. In short, data centers are being woven into a larger strategic argument: if the US wants to stay ahead of China’s rapidly scaling AI sector, the country must dramatically expand its computational backbone, and it must do so quickly.”

That logic helps explain why the Trump administration is so eager to speed up permitting and grid connections, even as environmental and consumer concerns mount. Dr. Kirkpatrick predicts a “light-touch” regulatory stance: some gestures toward grid stability and reporting, but very little that would actually slow the pace of construction:

“Industry has deep ties to the federal government in this area, and those relationships tend to produce ‘cooperative management’ rather than formal constraints. So while there may be incremental steps aimed at stabilizing the grid or improving reporting requirements, the dominant priority remains clear: ensuring that American AI development proceeds at maximum speed, even if that means postponing difficult decisions about long-term environmental and infrastructure impacts.”

In practice, that likely means:

- Expansions of federal authority to push interconnection rules;

- Continued tax incentives and loan guarantees;

- And a strong preference for “cooperative management” with industry rather than hard caps or moratoria.

Putting all of this together, a few practical conclusions shake out for places like Wyoming – and frankly, for any town staring down a 500-MW data center proposal:

1) Insist on full transparency.

Communities should demand clear, audited data on projected and actual energy use, water use, and emissions, plus disclosure of what share of power is genuinely additional clean generation versus “paper” offsets.

2) Make developers pay their way.

Echoing Mytton and the EESI, approvals and tax incentives should be contingent on developers funding the necessary grid upgrades and contributing to new clean generation, rather than leaving those costs to ratepayers.

3) Tie incentives to real local benefits.

If a city offers abatements, it should get something tangible in return: local job training pipelines, community benefits funds, or discounted service tiers for residents and small businesses – not just ribbon-cuttings and billboards.

4) Plan for aesthetics and land use, not just kilowatts.

Beauregard and Dietrich’s points about how these facilities look and feel matter. Good design, landscaping, and integration with surrounding uses can reduce opposition and make data centers less of a visual scar.

5) Don’t assume the AI boom will last forever.

Policymakers should be wary of over-committing land, subsidies, or grid capacity to a sector whose demand curve is still highly uncertain. Structuring deals so that communities are not left holding the bag if the AI hype cycle cools is just basic prudence.

6) Treat data centers as heavy industry.

That means applying the same standards we’d apply to a steel mill or refinery: robust environmental review, strong community engagement, and clear rules about who pays for what.

The servers humming away inside these buildings are abstract. The impacts are not.

The way we handle this data center moment – on taxes, on grids, on water, on who benefits – will say a lot about what kind of digital future we’re actually building: one that spreads opportunity, or one that centralizes power in a handful of companies while the rest of us help pay the electricity bill.
